LLMs were made to be tools of knowledge and intelligence, not to search through messy databases. Current Retrieval Augmented Generation (‘RAG’) tooling expects LLMs to be experts at search, and it consequently fails to deliver results. Fundamentally, RAG is an overwhelming task for an LLM: the attempt to find truth in data devolves as more ‘similar chunks’ are shoved into the transformer’s context, degrading the quality of its attention mechanism.

How can truth be found in data without search? It can’t. Search is an unavoidable paradigm when dealing with the real world and real business use cases for LLMs. Information that hasn’t been trained into an LLM must somehow be brought into its purview; however, the static weights of the transformer architecture define a model’s capabilities, and it is these weights that ‘weigh down’ the search mechanism. New, unknown information suffers from a compounding effect: each additional token that isn’t the ground truth of a query further starves the transformer of attention to the truth.
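The attention-starvation point can be illustrated with plain softmax arithmetic. The sketch below is a deliberately simplified model, not how a real transformer scores tokens: it assumes one relevant token with a fixed raw attention score and `n` distractor tokens that all share a single lower score (the scores themselves are hypothetical), and computes the share of attention the relevant token keeps as distractors are added.

```python
import math


def relevant_attention_share(relevant_score: float, distractor_score: float, n_distractors: int) -> float:
    """Softmax weight on one relevant token when n distractors compete for attention.

    Simplifying assumption: every distractor has the same raw score.
    """
    relevant = math.exp(relevant_score)
    distractors = n_distractors * math.exp(distractor_score)
    return relevant / (relevant + distractors)


if __name__ == "__main__":
    # Hypothetical scores: the relevant token scores 2.0 higher than each distractor.
    for n in (10, 100, 1_000, 10_000):
        share = relevant_attention_share(relevant_score=2.0, distractor_score=0.0, n_distractors=n)
        print(f"{n:>6} distractors -> {share:.4f} of attention on the relevant token")
```

Even though the relevant token outscores every individual distractor, its share falls from roughly 0.42 with 10 distractors to under 0.001 with 10,000, because the softmax denominator grows with every token added to the context.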

RAG is thus both a sickness and a cure for models. Database structuring attempts to solve this issue but fails when it mistakes the contents of reasoning (context) for the actual process of reasoning. Vector stores that measure the distance between embedded pieces of text and find similarities using nearest-neighbor algorithms aren’t coterminous with actual reasoning. Even specialized ‘embeddings’ can only capture similarity, such as shared keywords and topics, rather than reasoning. This is why vector stores fail to achieve actual search-based reasoning, and why we hear proclamations of the ‘failure of RAG’.
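For readers unfamiliar with how vector-store retrieval works, here is a minimal sketch of its core operation: rank stored chunks by cosine similarity to a query vector and return the top `k`. The three-dimensional vectors and chunk names are made up for illustration; real systems use learned embeddings with hundreds or thousands of dimensions and usually rely on approximate nearest-neighbor indexes rather than this exhaustive scan.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: list[float], store: dict[str, list[float]], k: int) -> list[tuple[str, float]]:
    # Exhaustive scan: score every chunk, then keep the k most similar.
    scored = [(chunk_id, cosine_similarity(query, vec)) for chunk_id, vec in store.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Hypothetical toy "embeddings" for three chunks.
    store = {
        "q3_revenue_table": [0.9, 0.1, 0.0],
        "q3_revenue_commentary": [0.8, 0.3, 0.1],
        "hr_policy": [0.0, 0.2, 0.9],
    }
    query = [1.0, 0.2, 0.0]
    for chunk_id, score in top_k(query, store, k=2):
        print(chunk_id, round(score, 3))
```

The ranking is purely geometric: the function returns whatever lies closest to the query vector, whether or not those chunks actually contain the answer. That is the gap between similarity and reasoning the paragraph describes.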

‘Agentic’ search has been presented as a solution to the RAG paradigm. Here, rather than vectors powering search, smaller agents comb through data to ingest, summarize and present it to a ‘master’ model. This may sound appealing, but it devolves into essentially the same challenge as vector-based search, except that agents, rather than keyword embeddings, power the search algorithm. RAG is unavoidable in the basic sense that retrieval must augment the generative knowledge of a model. The core challenge of RAG nonetheless remains: truth and reasoning are not the result of either keywords or ‘agents’ pushing similar conceptual chunks into a context window.

Truth is the synthesis of context and reasoning. Truth requires context: context rightly delivered and presented, concisely and clearly, to an attentive model. When attention is overwhelmed, synthesis is impossible. When context is missing, hallucination is the result. What search-based reasoning needs is not simply chunks of similar information or relevant concepts but a comprehensive ETL that can Extract the truth a model needs, Transform it into concise, legible formats and Load it into the proper context for reasoning.
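To make the Extract, Transform, Load framing concrete, here is a deliberately simple sketch. It is not Gestell’s implementation: every name in it (`Record`, `extract`, `transform`, `load`) is hypothetical, and the "extraction" is a naive field lookup over already-parsed records, standing in for whatever document understanding a real system would perform. What it illustrates is the contrast with similarity search: only the fields a question needs are extracted, they are rendered as short, legible lines with provenance, and the result is loaded into a prompt under a fixed size budget instead of filling the context window with similar chunks.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Hypothetical pre-parsed document: a source name plus named fields."""
    source: str
    fields: dict[str, str]


def extract(records: list[Record], needed: set[str]) -> list[tuple[str, str, str]]:
    # Extract: keep only the fields the question actually needs, with provenance.
    return [
        (r.source, name, value)
        for r in records
        for name, value in r.fields.items()
        if name in needed
    ]


def transform(facts: list[tuple[str, str, str]]) -> list[str]:
    # Transform: render each fact as one short, legible line.
    return [f"- {name}: {value} (source: {source})" for source, name, value in facts]


def load(question: str, lines: list[str], max_chars: int) -> str:
    # Load: assemble a prompt, stopping before the character budget is exceeded.
    header = f"Question: {question}\nFacts:\n"
    body: list[str] = []
    used = len(header)
    for line in lines:
        if used + len(line) + 1 > max_chars:
            break
        body.append(line)
        used += len(line) + 1  # +1 accounts for the joining newline
    return header + "\n".join(body)


if __name__ == "__main__":
    records = [
        Record("contract_a.pdf", {"party": "Acme Corp", "term": "24 months",
                                  "boilerplate": "Standard indemnification clause"}),
        Record("contract_b.pdf", {"party": "Globex", "term": "12 months"}),
    ]
    facts = extract(records, needed={"party", "term"})
    print(load("Which contract has the longer term?", transform(facts), max_chars=500))
```

The budget here is counted in characters only to keep the sketch dependency-free; a real pipeline would more likely budget in model tokens.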

The Gordian knot of the search mechanism and the fundamental architecture of a transformer is circumvented by an ETL, because an ETL doesn’t ask a model to ‘search’ for data but instead ‘delivers’ the exact contents the model needs. An ETL is a complement to a model, an assistant in its reasoning. The challenge with applying this ETL model to LLM reasoning is that most of the data LLMs need to reason over isn’t in the structured formats that traditional ETL architectures can access. Most of it is trapped in unstructured, inaccessible formats.

A new ETL is thus needed.

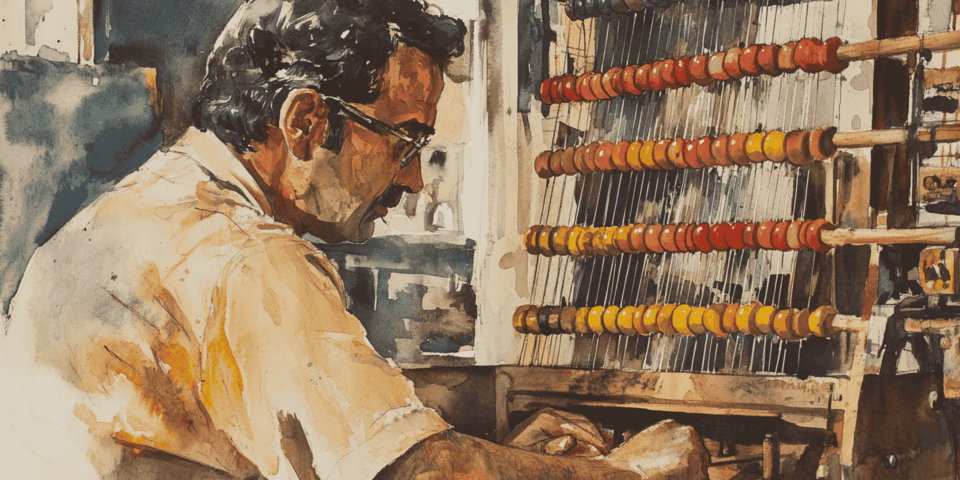

Gestell is a new ETL for LLMs: a comprehensive data structuring system that transforms unstructured data into AI-ready databases. Gestell covers the entire process from Enframing to Disclosure, enabling accurate, scalable search-based reasoning for your language models.

Gestell solves the RAG problem by relieving the model of search and instead having an ETL provide the exact information the model needs to make a decision. Our ‘search’ process, then, is not comparable to normal ‘retrieval’ processes that attempt to ‘find’ chunks of similar context within a database; rather, it is a method of ‘delivering’ truth to a model, which can then think about the data and come to a decision. What we call Disclosure (search-based reasoning) is in fact the limiting of the information delivered to a model, so that the model receives exactly the context it needs to reason effectively. Gestell prioritizes attention and context as a means of enabling reasoning.

Structure unstructured data with enhanced LLM-based vision

Organize frames into structured hierarchies and relational maps of meaning

Represent and manage canons while maintaining their complex context

Process, reason over and search through data for complex queries and prompts

1. Integrated ETL: Simply upload your data, in any format and from any discipline, and let Gestell handle the rest, from Enframing to Disclosure.

2. Customizable: Use natural language to instruct Gestell how to structure your data.

3. Scalable, immediately: Gestell is the only search-based reasoning solution on the market proven to scale beyond 50k pages, and it is ready to deploy immediately.

4. Cost-efficient: Gestell is ~40% cheaper than solutions like Google’s Document AI, which handles document parsing only. Gestell’s lower pricing also covers every file type and format (images and video included) and includes structuring the data being processed.

We often hear from users that they have either relied on AI workspaces (for example, AWS or Google Vertex AI) or built their products on point solutions such as traditional vector stores.

Mainstream AI workspaces exemplify the current RAG paradigm: they enable simple data upload and then naively throw everything into a vector store, expecting quality results from search. The problem is that their ease of use gives the appearance of competence at search-based reasoning. Almost all of these tools offer no-code deployment and plentiful connectors for bringing data into the platform; however, their architecture is fundamentally flawed, which leads to failure in production-level deployments of search-based reasoning.

Outside of AI workspaces, many people opt to build their own data processing, structuring and search systems from individual tools, or point solutions. Point solutions like vector stores or knowledge graphs attempt to enable deeper complexity or higher-performance search by specializing in one part of the ‘RAG’ stack. Companies that don’t rely on AI workspaces will often cobble together 5+ point solutions to build search-based reasoning. Point solutions face two primary challenges: they weren’t made with other solutions in mind, and they take a long time to deploy in tandem. Because each one specializes in a single part of the stack, they fail to integrate cleanly with the rest of the search architecture, resulting in over-emphasis on that one part. For example, some RAG tooling may excel at single-document retrieval but fail when you add more data or different formats. Additionally, these tools take months of engineering work to deploy, with no guarantee of scalable results. A demo can often be pieced together from them, but production-scale, real-world use cases frequently never ship.

Below is a comparison table of Gestell vs. the competition:

| Feature | Gestell | Pinecone | Chroma | Google | AWS | Databricks | Snowflake | Cohere |
|---|---|---|---|---|---|---|---|---|
| Integrated ETL (end-to-end from Enframing to Disclosure) | | | | | | | | |
| Ingestion: Parsing (systematic breakdown and analysis of input data) | | | | | | | | |
| Ingestion: Intelligent Chunking (LLM-enabled segmentation for search optimization) | | | | | | | | |
| Ingestion: Multi-modal (handles diverse data types simultaneously) | | | | | | | | |
| Customizable, specialized vector store (tailored database optimized for vector embeddings) | | | | | | | | |
| Integrated Knowledge Graphs (information network showing contextual relationships) | ¹ | | | | | | | |
| Re-rankers (refined search result prioritization system) | | | | | | | | |
| Accurate Scalability (maintains precision while handling increasing data volumes, 50k+ pages) | | | | | | | | |
| Feature Extraction (identifies and isolates key data characteristics) | | | | | | | | |
| Table Structuring and Extraction (organizes and retrieves tabular information effectively) | | | | | | | | |
| RLHF enablement (supports human feedback for model improvement) | | | | | | | | |
| Full pipeline structuring optimization (end-to-end process refinement for maximum efficiency across the stack) | | | | | | | | |
| Code / No-Code (flexible implementation across technical expertise) | BOTH | CODE | BOTH | CODE² | BOTH | BOTH | BOTH | CODE |
| Model-Agnostic (works with any AI framework or model) | | | | | | | | |
| 7-day-a-week support (white-glove support: you will have the founder's phone number) | | | | | | | | |

Reach out: Gestell is ready to deploy today.